What is Sora? An AI model from text to video AI?

We are training AI to comprehend and replicate the dynamics of the physical world, aiming to develop models that assist individuals in tackling challenges necessitating real-world engagement.

This AI model, named after the Japanese word for "sky," can create realistic and imaginative videos from text descriptions.

We are training AI to comprehend and replicate the dynamics of the physical world, aiming to develop models that assist individuals in tackling challenges necessitating real-world engagement.

Today marks a significant milestone as Sora steps into the limelight, extending its reach to red teamers for pinpointing potential risks and vulnerabilities in critical domains. But that's not all. We're also extending an invitation to visual virtuosos, design mavens, and cinematic storytellers to partake in shaping the evolution of Sora, ensuring it becomes an indispensable tool for creative professionals.

By opening the doors to our research endeavors ahead of schedule, we're not just inviting collaboration; we're fostering a dynamic exchange of ideas with individuals beyond the realms of OpenAI. This isn't just about unveiling the latest in AI prowess; it's about igniting imaginations and illuminating the possibilities that lie ahead.

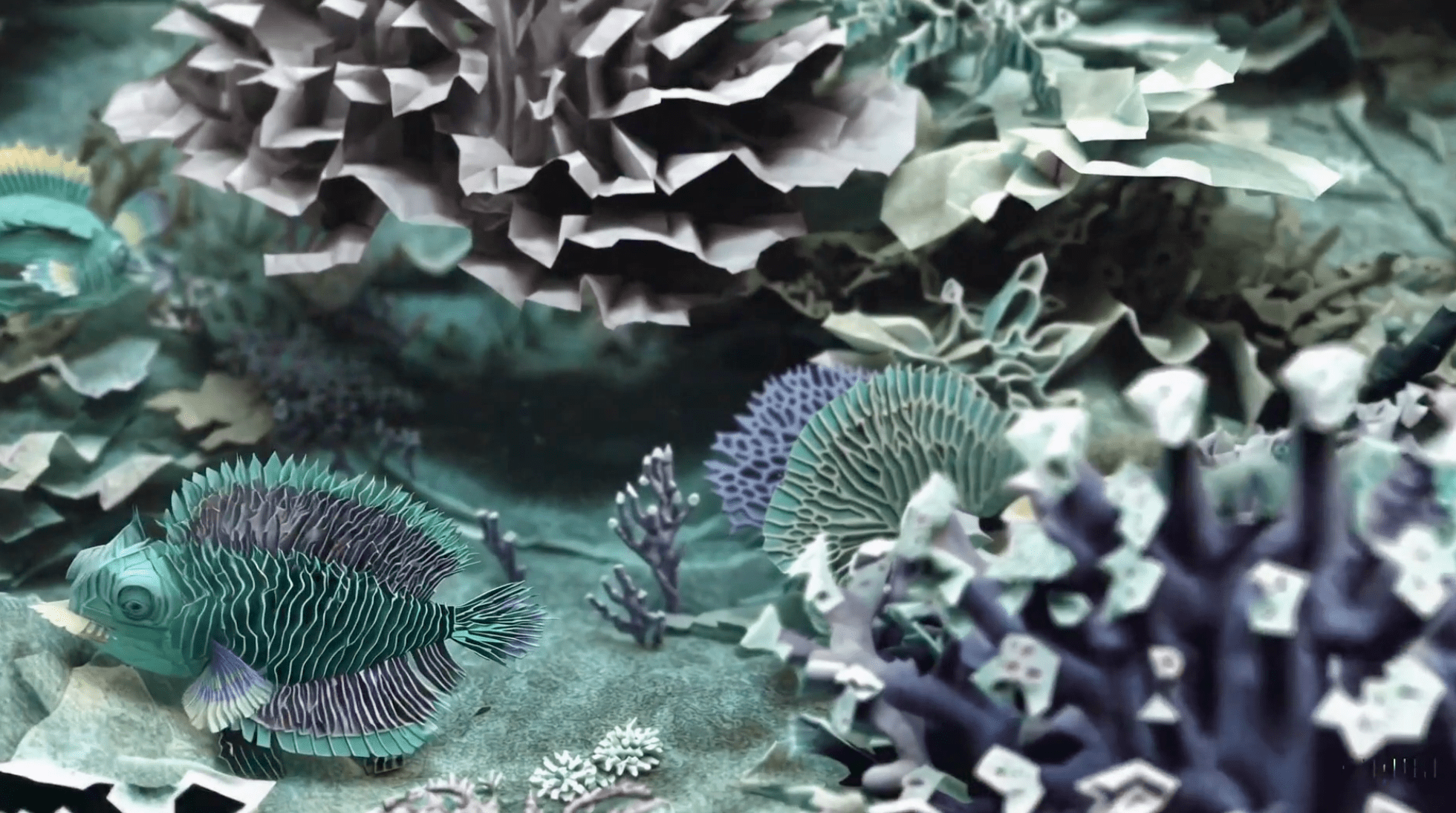

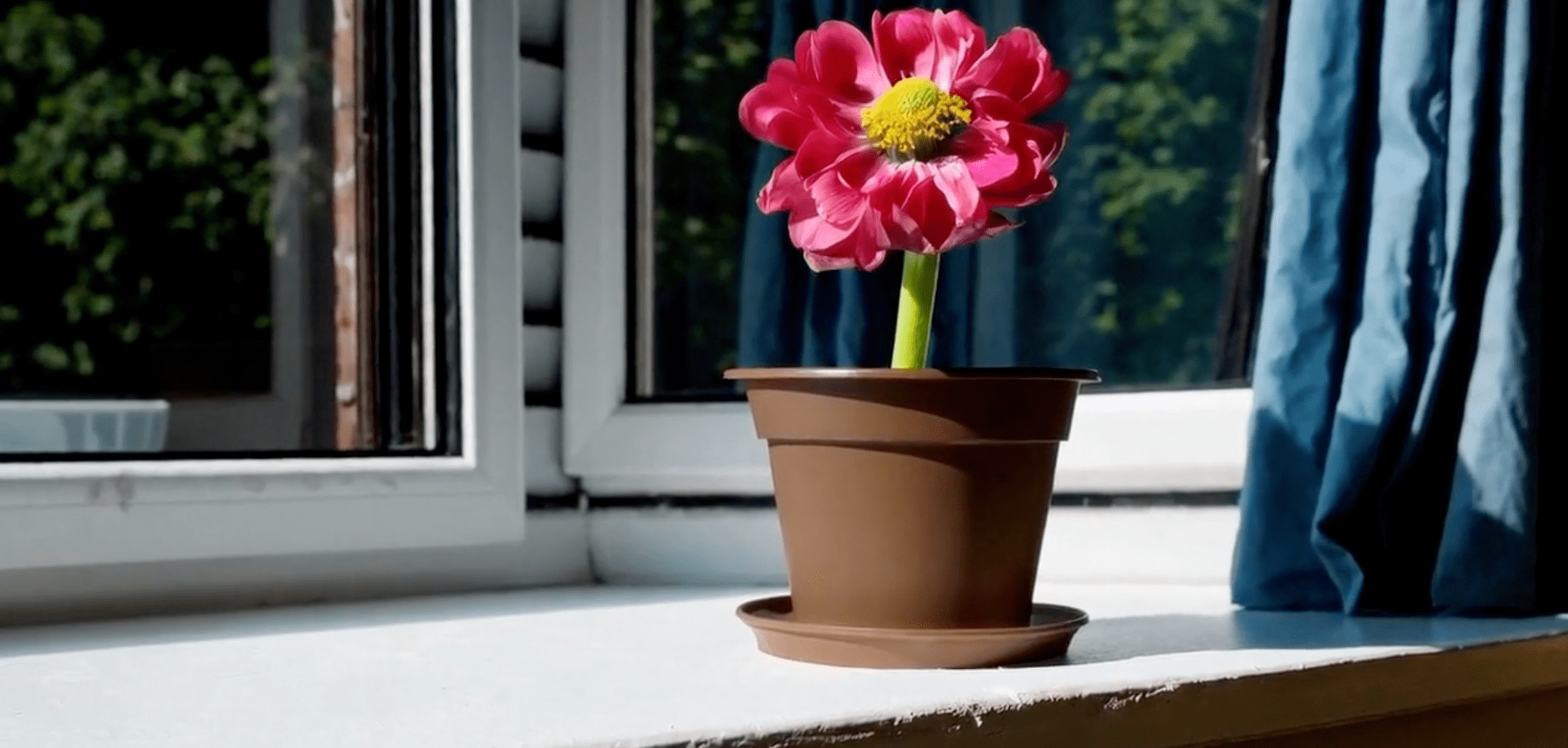

Sora possesses the capability to craft intricate scenes featuring multiple characters, diverse motion patterns, and precise details of both subject and background elements. Beyond merely interpreting user prompts, the model comprehends the nuances of these elements as they manifest in the real world, ensuring an unparalleled level of realism and accuracy.

Leveraging its profound comprehension of language, the model adeptly deciphers prompts and crafts captivating characters brimming with vivid emotions. Sora's prowess extends further as it seamlessly integrates multiple shots within a single video, ensuring consistency in character portrayal and visual aesthetics throughout.

The existing model exhibits certain limitations. It may encounter challenges in accurately simulating the intricate physics of complex scenes and could potentially overlook specific cause-and-effect relationships. For instance, while a person may be depicted taking a bite out of a cookie, the resulting absence of a bite mark on the cookie could be overlooked.

Additionally, spatial orientation within prompts may pose difficulties, leading to occasional confusion between left and right. Moreover, the model may encounter obstacles in providing precise descriptions of events unfolding over time, such as tracking a specific camera trajectory.

Sora employs a diffusion model, refining videos from static noise. It anticipates multiple frames, ensuring consistent subject representation. Utilizing a transformer architecture like GPT models, Sora represents visual data as patches, enhancing scalability. It incorporates recaptioning from DALL·E 3 for faithful user instruction interpretation. Sora can generate videos from text, animate still images accurately, and extend existing videos. This foundational model advances towards comprehending and simulating the real world, a crucial step towards Artificial General Intelligence (AGI). You can learn more about it here: https://openai.com/sora

Unfortunately, as of today, February 16, 2024, OpenAI's Sora text-to-video model is not publicly available for use. Currently, OpenAI is using it for internal research and testing, with no confirmed date for public release.

Limited Access: OpenAI initially shared Sora with "red teamers" (experts in areas like misinformation and bias) and select creative professionals for feedback.

Future Availability: OpenAI aims to share progress and potentially provide access in the future, but no concrete plans are announced.

Alternative Options: Some alternatives exist that offer text-to-image generation or animation, like Dream by WOMBO, NightCafe Creator, and DALL-E 2 (limited access). However, these don't yet create full videos like Sora. While directly using Sora isn't possible now, you can:

Stay Informed: Track OpenAI's blog and social media for updates on Sora's accessibility. Explore Alternatives: Experiment with the text-to-image options mentioned above to get a feel for the technology. Learn More: Read articles and watch videos about Sora's capabilities and potential (like the links I shared before). I hope this clarifies the current situation with Sora. Even though we can't use it yet, it's exciting to see its development and future possibilities.